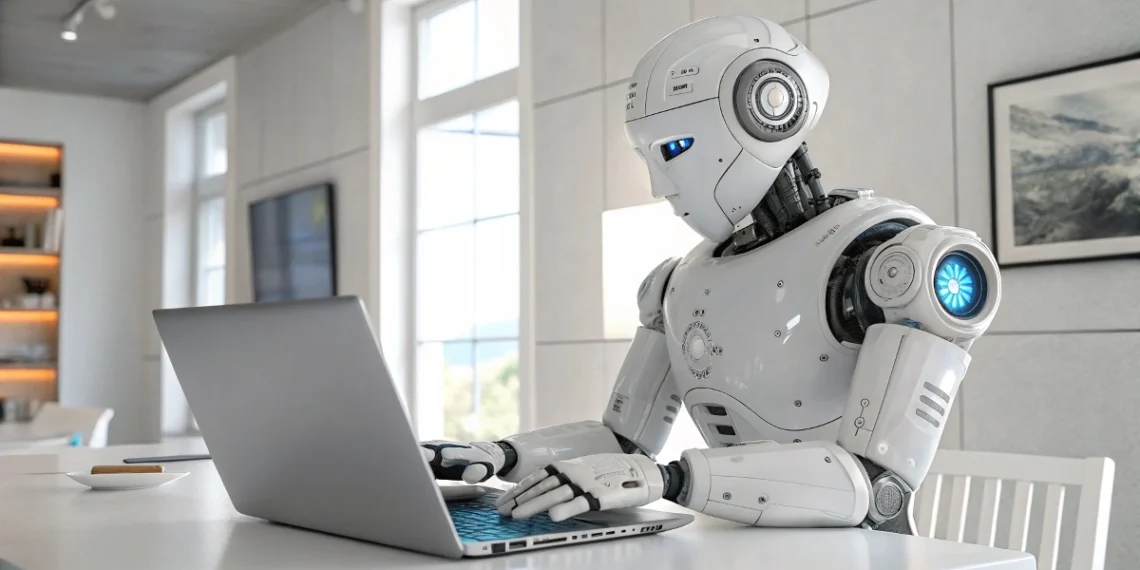

AI systems now make life-altering decisions every day, but who is responsible when things go wrong?

Companies deploy algorithms that decide who gets loans, medical treatment, or prison time, yet everyone points elsewhere when harm occurs.

"The system did it," they claim. This ethical shell game, "consciousness laundering," lets organizations outsource moral decisions while avoiding accountability.

The results? Biased facial recognition leads to the wrongful arrest of innocent people. Lending algorithms deny loans to qualified minority applicants. Predictive policing intensifies racial profiling.

We need to recognize this dangerous trend before algorithmic moral agents fully replace human judgment and human responsibility.

The Rise of Artificial Moral Agents (AMAs)

AI systems now act as decision-makers in contexts with major ethical implications, functioning as stand-ins for human moral judgment.

Origins and Goals of AMAs

The quest to build machines capable of ethical reasoning began in academic labs exploring whether moral principles could be translated into code.

Computer scientists started with simple rule-based systems intended to prevent harm and gradually moved toward more sophisticated approaches.

Military research agencies provided substantial funding, particularly for systems that could make battlefield decisions within legal and ethical boundaries.

These early efforts laid the groundwork for what would become a broader technological movement.

Tech companies soon recognized both the practical applications and the marketing potential of "ethical AI."

Between 2016 and 2020, most major tech companies established AI ethics departments and published ethics principles.

Google formed an ethics board. Microsoft released AI fairness guidelines. IBM launched initiatives focused on "trusted AI."

These corporate moves signaled a shift from theoretical exploration to commercial development, with systems designed for real-world deployment rather than academic experimentation.

What began as philosophical inquiry gradually turned into technology now embedded in critical systems worldwide.

Today, AMAs determine who receives loans, medical treatment, job interviews, and even bail.

Each implementation represents a transfer of moral responsibility from humans to machines, often with minimal public awareness or consent.

The technology now makes life-altering decisions affecting millions of people every day, operating on the premise that algorithms can deliver more consistent, unbiased ethical judgments than humans.

Justifications for AMA Deployment

Organizations justify deploying AMAs on two main grounds: efficiency and supposed objectivity. The efficiency argument focuses on speed and scale.

Algorithms process thousands of cases per hour, far outpacing human decision-makers.

Courts adopt risk assessment tools to handle case backlogs. Hospitals use triage algorithms during resource shortages.

Banks process loan applications automatically. Each use promises faster results with fewer resources, a compelling pitch for chronically underfunded institutions.

The objectivity claim holds that algorithms avoid human biases. Companies market their systems as transcending prejudice through mathematical precision. "Our algorithm doesn't see race," they claim. "It only processes data."

This narrative appeals to organizations worried about discrimination lawsuits or bad publicity. The machine becomes a convenient solution, supposedly free from the prejudices that plague human judgment.

The claim provides both marketing appeal and legal cover, offering a way to outsource moral responsibility.

Together, these justifications create powerful incentives for adoption, even when the evidence supporting them remains thin.

Organizations can simultaneously cut costs and claim ethical progress, an irresistible combination for executives facing budget constraints and public scrutiny.

The efficiency gains are often measurable, while the ethical compromises stay hidden behind technical complexity and proprietary algorithms.

This imbalance lets AMAs spread rapidly despite serious concerns about their unintended consequences and embedded biases.

Critiques of AMAs

Critics highlight fundamental flaws in both the concept and the implementation of artificial moral agents. The most substantial concern is the reproduction of bias.

AI systems learn from historical data containing discriminatory patterns. Facial recognition fails more often on darker skin tones. Hiring algorithms favor candidates who match existing employee demographics.

Healthcare systems allocate fewer resources to minority patients. These biases don't require explicit programming; they emerge naturally when algorithms learn from data that reflects societal inequalities.

The neutrality claim itself is another critical failure point. Every algorithm embodies values through what it optimizes for, what data it uses, and what constraints it operates under.

When Facebook prioritizes engagement, it makes an ethical choice that values attention over accuracy. When an algorithm determines bail based on "flight risk," the definition of risk itself reflects human value judgments.

The illusion of neutrality serves powerful interests by obscuring these embedded values while preserving existing power structures.

Perhaps most troubling is how AMAs let organizations evade accountability for moral decisions. By attributing choices to algorithms, people create distance between themselves and the outcomes.

A judge can blame the risk score rather than their own judgment. A bank can point to the algorithm rather than to discriminatory lending practices.

This diffusion of responsibility creates what philosophers call a "responsibility gap," in which no one, neither humans nor machines, bears full moral accountability for decisions that profoundly affect human lives.

Mechanisms of Consciousness Laundering

Consciousness laundering operates through specific techniques that obscure ethical responsibility while sustaining harmful practices.

Bias Amplification

AI systems don't just reproduce existing biases; they often amplify them through feedback loops.

Amplification happens when algorithms trained on biased data make decisions that generate more biased data, creating a worsening cycle.

Police departments using predictive algorithms trained on historically biased arrest records send more officers to already over-policed neighborhoods.

More police presence leads to more arrests for minor offenses, which confirms and strengthens the algorithm's focus on those areas in future predictions.
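A toy simulation makes this loop concrete. The sketch below is a minimal illustration, not a model of any real predictive policing product. It assumes two hypothetical neighborhoods with the *same* underlying offense rate and a record history that starts slightly skewed toward neighborhood A. It also assumes a hypothetical "hotspot" rule that sends most patrols wherever recorded arrests are highest. Because arrests are only recorded where officers are deployed, the skew in the records grows even though behavior in the two areas never differs.

```python
def simulate_patrol_feedback(
    initial_records: tuple[float, float],
    offense_rate: float,
    patrols_per_round: float,
    rounds: int,
    hotspot_share: float = 0.8,
) -> float:
    """Return neighborhood A's share of all recorded arrests after `rounds`.

    Hypothetical model: both neighborhoods share the same true offense rate,
    and recorded arrests are proportional to the patrols sent there.
    """
    records = list(initial_records)
    for _ in range(rounds):
        # The "prediction": whichever area has more recorded arrests is the hotspot.
        hot = 0 if records[0] >= records[1] else 1
        patrols = [0.0, 0.0]
        patrols[hot] = hotspot_share * patrols_per_round
        patrols[1 - hot] = (1 - hotspot_share) * patrols_per_round
        # Arrests are only observed where officers are deployed.
        for i in range(2):
            records[i] += patrols[i] * offense_rate
    return records[0] / sum(records)


if __name__ == "__main__":
    for rounds in (0, 10, 50, 200):
        share = simulate_patrol_feedback((55, 45), offense_rate=0.1,
                                         patrols_per_round=100, rounds=rounds)
        print(f"after {rounds:3d} rounds, A holds {share:.0%} of recorded arrests")
```

In this sketch, A's share of recorded arrests climbs from 55% toward the 80% hotspot share, although both areas were given the same offense rate. The design choice matters. A rule that allocated patrols in exact proportion to past records would merely preserve the initial skew. The winner-take-most rule is what turns a small difference into a large one.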

Medical algorithms trained on historical treatment records absorb decades of healthcare inequities.

One widely used tool for allocating care resources systematically underestimated Black patients' needs because it used past healthcare spending as a proxy for medical need.

Because systemic barriers meant less had historically been spent on Black Americans' care, the algorithm incorrectly concluded they needed less care.

This technical choice amplified existing inequalities while appearing scientifically valid.
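The proxy problem can be reproduced in miniature. The sketch below assumes a hypothetical cohort in which two groups have identical medical need, but group B's past spending is halved by access barriers. It is an illustration of proxy-label bias, not a reconstruction of the actual tool described above. The names `Patient`, `build_cohort`, `enroll`, and the access factor are all invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    group: str     # hypothetical demographic label, "A" or "B"
    need: int      # true medical need, which the proxy model never sees
    spending: int  # past healthcare spending in dollars, used as the proxy


def build_cohort(access_factor_b: float = 0.5) -> list[Patient]:
    """Two groups with identical needs; group B's past spending is scaled
    down by a hypothetical access barrier, not by lower need."""
    cohort = []
    for need in range(1, 11):
        cohort.append(Patient("A", need, need * 1000))
        cohort.append(Patient("B", need, int(need * 1000 * access_factor_b)))
    return cohort


def enroll(cohort: list[Patient], slots: int, by_proxy: bool) -> list[Patient]:
    """Fill `slots` places in a care program, ranking by spending or by need."""
    key = (lambda p: p.spending) if by_proxy else (lambda p: p.need)
    return sorted(cohort, key=key, reverse=True)[:slots]


def count_group(patients: list[Patient], group: str) -> int:
    return sum(1 for p in patients if p.group == group)


if __name__ == "__main__":
    cohort = build_cohort()
    print("B enrolled, ranked by need:    ", count_group(enroll(cohort, 10, by_proxy=False), "B"))
    print("B enrolled, ranked by spending:", count_group(enroll(cohort, 10, by_proxy=True), "B"))
```

Ranking by true need fills half of the ten slots with group B patients (5). Ranking by the spending proxy admits only 3, even though no line of code mentions group membership.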

Financial systems rely on credit histories that reflect historical redlining and discrimination. People from communities that banks historically avoided now lack the credit history needed to score well on algorithmic assessments.

Algorithms don't need to know an applicant's race to discriminate effectively; they only need factors that correlate with race, such as address history or banking patterns.

Each cycle of algorithmic decision-making magnifies these disparities while wrapping them in mathematical authority that makes them harder to challenge.
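A short sketch shows how a rule that never reads race can still produce unequal outcomes. The applicants, zip codes, and zip-level penalty below are all hypothetical. The two groups have the same spread of incomes and differ only in where most of their members live, a stand-in for residential segregation. The `approve` function looks only at zip code and income.

```python
from collections import defaultdict

# Hypothetical applicants: (group, zip_code, annual_income). Incomes are the
# same across groups; only residential patterns differ, mimicking segregation.
APPLICANTS = [
    ("X", "11111", 52_000), ("X", "11111", 61_000), ("X", "11111", 48_000),
    ("X", "22222", 55_000),
    ("Y", "22222", 52_000), ("Y", "22222", 61_000), ("Y", "22222", 48_000),
    ("Y", "11111", 55_000),
]

# Hypothetical zip-level penalty "learned" from historical defaults; in a
# redlined area those defaults partly reflect past exclusion, not applicants.
ZIP_PENALTY = {"11111": 0, "22222": 15_000}


def approve(zip_code: str, income: int, threshold: int = 45_000) -> bool:
    """Decision rule that never reads the group field."""
    return income - ZIP_PENALTY[zip_code] >= threshold


def approval_rates(applicants) -> dict[str, float]:
    """Approval rate per group, computed only after decisions are made."""
    approved, total = defaultdict(int), defaultdict(int)
    for group, zip_code, income in applicants:
        total[group] += 1
        approved[group] += approve(zip_code, income)  # bool counts as 0 or 1
    return {g: approved[g] / total[g] for g in total}


if __name__ == "__main__":
    print(approval_rates(APPLICANTS))
```

Group X is approved at 75% and group Y at 50%, although the decision function has no access to group membership. The zip code carries that information for it.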

Ethics Washing

Organizations increasingly use the language of ethics without substantively changing their practices. This lets them appear responsible while avoiding meaningful reform.

Major tech companies publish impressive-sounding AI ethics principles with no enforcement mechanisms. Google promises not to build harmful AI while developing military applications.

Facebook commits to fairness while its algorithms spread misinformation that harms marginalized communities. The gap between stated values and actual products reveals how superficial many corporate ethics commitments are.

Ethics boards and committees often function as window dressing rather than governance bodies. They typically lack the authority to block product launches or business deals that violate ethical guidelines.

Google's Advanced Technology External Advisory Council was dissolved after just one week amid controversy. Other companies maintain ethics teams with minimal influence over business decisions.

These structures create the appearance of ethical oversight without challenging profit motives. When ethical concerns conflict with business goals, ethics usually loses.

Companies adopt ethics language while actively fighting meaningful regulation. They argue that voluntary guidelines are sufficient while lobbying against legal constraints.

This approach lets them control the narrative around AI ethics while avoiding accountability. The result is a system in which ethics becomes a business strategy rather than an operational constraint.

The language of responsibility protects corporate interests rather than the public good. That is the essence of consciousness laundering.

Obfuscation of Accountability

AI systems create deliberate ambiguity about who bears responsibility for harmful outcomes. When algorithms cause harm, blame falls into a gap between human and machine decision-making.

Companies claim the algorithm made the decision, not them. Developers say they only built the tool and didn't decide how it would be used.

Users say they simply followed the system's recommendation. Each party points to another, creating a "responsibility gap" in which no one is fully accountable.

Organizations exploit this ambiguity strategically. Human resources departments use automated screening tools to reject job applicants, then tell candidates that "the system" made the decision.

This shields HR professionals from uncomfortable conversations while allowing discriminatory outcomes that would be illegal if people produced them explicitly.

Technical complexity makes this deflection more convincing, since few applicants can effectively challenge an algorithmic decision.

Legal systems struggle to address this diffusion of responsibility, because AI blurs the line between who made a decision and who should answer for it.

Who bears responsibility when an algorithm recommends denying a loan: the developer who built it, the data scientist who trained it, the manager who deployed it, or the institution that profits from it?

This uncertainty creates safe harbors for organizations deploying harmful systems, letting them enjoy the benefits of automation while avoiding its ethical costs.

Case Studies in Unethical AI Deployment

Across sectors, AI systems enable unethical practices while giving organizations technical cover.

Financial Systems

Banks and financial institutions deploy AI systems that effectively exclude marginalized communities while maintaining a veneer of objective risk assessment.

Anti-money laundering algorithms disproportionately flag transactions from certain regions as "high risk," creating a form of digital redlining.

A small business owner in Somalia might find legitimate transactions routinely delayed or blocked because an algorithm deemed their country suspicious.

While appearing neutral, these systems effectively cut entire communities off from the global financial system.

Lending algorithms perpetuate historical patterns of discrimination while appearing mathematically sound.

Pew Research Center studies consistently show that Black and Hispanic applicants are rejected at higher rates than white applicants with similar financial profiles.

The algorithms don't explicitly consider race; they use factors such as credit score, zip code, and banking history. Yet these factors strongly correlate with race because of decades of housing discrimination and unequal access to financial services.

Lenders defend these systems by pointing to their statistical validity, ignoring how those statistics reflect historical injustice.

Credit scoring systems compound these problems by creating feedback loops that trap marginalized communities. People denied loans can't build a credit history, which lowers their scores further.

Communities historically denied access to financial services remain excluded, now through algorithms rather than explicit policies.

The technical complexity of these systems makes discrimination harder to prove and address, even though they produce outcomes that would violate fair lending laws if people produced them explicitly.

This laundering of discrimination through algorithms is a central case of consciousness laundering.

Education

Educational institutions increasingly deploy AI systems that undermine learning while claiming to improve it. Automated essay scoring programs promise efficiency but often reward formulaic writing over original thinking.

Proctoring software claims to ensure test integrity but creates invasive surveillance systems that disproportionately flag students of color and students with disabilities.

Each case trades fundamental educational values for administrative convenience, and students bear the costs of these trade-offs.

The rise of generative AI creates new challenges for meaningful learning. Students increasingly use AI text generators for assignments, producing essays that appear original but require minimal intellectual engagement.

Educators worry about academic integrity, but the deeper concern is skill development.

Students who outsource thinking to machines may fail to develop crucial skills in research, analysis, and original expression, skills essential for meaningful participation in democracy and the workforce.

Consciousness laundering occurs when institutions frame these technological choices as educational improvements rather than cost-cutting measures.

Universities promote "personalized learning platforms" that often simply track student behavior while delivering standardized content.

K-12 schools tout "adaptive learning systems" that often amount to digitized worksheets with data collection capabilities.

The language of innovation masks the replacement of human judgment with algorithmic management, often to the detriment of genuine learning.

Criminal Justice

The criminal justice system has embraced algorithmic tools that reproduce and amplify existing inequities.

Predictive policing algorithms direct patrol resources based on historical crime data, sending more officers to neighborhoods with historically high arrest rates, typically low-income and minority communities.

Officers flood these neighborhoods and ticket behaviors they would ignore in wealthier areas, catching minor infractions that wouldn't draw attention in other parts of town.

This creates a feedback loop: more policing leads to more arrests, which confirms the algorithm's prediction about where crime occurs.

Risk assessment algorithms now influence decisions about bail, sentencing, and parole. These systems assign risk scores based on factors such as criminal history, age, employment, and neighborhood.

Judges use these scores to decide whether defendants stay in jail before trial or receive longer sentences.

These tools often predict higher risk for Black defendants than for white defendants with similar backgrounds.

The human consequences are severe: people detained before trial often lose jobs, housing, and custody of their children, even if they are ultimately found innocent.
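One common way to test a claim like this is to compare error rates across groups rather than raw scores. The question is, among people who did *not* go on to reoffend, what share were labeled high risk in each group? The sketch below computes that false positive rate on a tiny, entirely hypothetical set of records. It is one of several competing fairness metrics, and when recorded base rates differ between groups, those metrics generally cannot all be satisfied at once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    group: str               # hypothetical group label
    labeled_high_risk: bool  # the tool's pretrial label
    reoffended: bool         # observed outcome during follow-up


def false_positive_rate(cases: list[Case], group: str) -> float:
    """Share of people in `group` who did NOT reoffend but were labeled high risk."""
    negatives = [c for c in cases if c.group == group and not c.reoffended]
    if not negatives:
        raise ValueError(f"no non-reoffending cases for group {group!r}")
    return sum(c.labeled_high_risk for c in negatives) / len(negatives)


# Hypothetical audit records, not real data.
CASES = [
    Case("A", True, False), Case("A", True, False),
    Case("A", False, False), Case("A", False, False), Case("A", True, True),
    Case("B", True, False), Case("B", False, False),
    Case("B", False, False), Case("B", False, False), Case("B", True, True),
]

if __name__ == "__main__":
    for g in ("A", "B"):
        print(g, false_positive_rate(CASES, g))
```

In these made-up records, half of group A's non-reoffenders were labeled high risk, compared with a quarter of group B's. That kind of gap means one group disproportionately bears the cost of the tool's mistakes.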

What makes these systems particularly problematic is how they launder bias through mathematical complexity.

Police departments can claim they are simply deploying resources "where the data shows crime happens," obscuring how those data patterns emerge from discriminatory practices.

Courts can point to "evidence-based" risk scores rather than potentially biased judicial discretion. Because the process is algorithmic, these patterns are harder to challenge as discriminatory, even though they produce disparate impacts.

The technical veneer provides both legal and psychological distance from moral responsibility for these outcomes.

Ethical and Philosophical Challenges

Delegating moral decisions to AI systems raises profound questions about responsibility, human agency, and the nature of ethical reasoning.

- Moral Responsibility in AI Systems: The question of who bears responsibility when AI causes harm remains unresolved. Developers claim they just built tools, companies point to algorithms, and users say they followed recommendations. This responsibility shell game leaves those harmed without clear recourse. Legal systems struggle with the ambiguity, because traditional liability frameworks assume clear causal chains that AI systems deliberately obscure. The resulting accountability vacuum serves powerful interests while leaving vulnerable populations exposed to algorithmic harms with no meaningful path to justice or compensation.

- Human Judgment vs. Algorithmic Automation: Human ethical reasoning differs fundamentally from algorithmic processing. We consider context, apply empathy, recognize exceptions, and weigh competing values according to specific circumstances. Algorithms follow fixed patterns regardless of unique situations. This mismatch becomes critical in complex cases, where judgment matters most. A judge might consider a defendant's life circumstances, while an algorithm sees only variables. A doctor might weigh a patient's wishes against medical indications, while an algorithm optimizes only for measurable outcomes. This gap between human and machine reasoning creates serious ethical shortfalls.

- The Myth of AI "Consciousness": Companies increasingly anthropomorphize AI systems, suggesting they possess agency, understanding, or moral reasoning capabilities. This narrative serves strategic purposes: attributing human-like qualities to machines helps shift responsibility away from their human creators. Terms like "AI ethics" and "responsible AI" subtly suggest that the technology itself, rather than its creators, bears moral obligations. This linguistic sleight of hand distorts public understanding. AI systems process patterns in data; they don't "understand" ethics or possess consciousness. Attributing moral agency to algorithms obscures the human choices, values, and interests embedded in these systems.

Mitigating Consciousness Laundering

Addressing consciousness laundering requires intervention at the technical, policy, and social levels to restore human agency and accountability.

- Technical Solutions: Several technical approaches can help reduce algorithmic harms. Explainable AI makes decision processes transparent instead of leaving them as opaque black boxes. Algorithmic impact assessments evaluate potential harms before deployment. Diverse training data helps prevent bias amplification. Continuous monitoring detects and corrects emerging problems. These technical fixes, while necessary, are insufficient on their own. They must operate within stronger governance frameworks and be guided by clear ethical principles. Technical solutions without corresponding accountability mechanisms often become window dressing rather than meaningful safeguards.

- Policy and Governance: Regulatory frameworks must evolve to address algorithmic harms. The EU AI Act offers one model, classifying high-risk AI applications and requiring greater oversight. Mandatory algorithmic impact assessments before deployment can prevent foreseeable harms. Independent third-party audits can verify claims about fairness and accuracy. Legal liability reforms must close the accountability gaps between developers, deployers, and users. Civil rights protections need updating to address algorithmic discrimination specifically. These governance approaches must focus on outcomes rather than intentions, holding systems accountable for their actual impacts.

- Sociocultural Shifts: Long-term solutions require broader changes in how we understand technology's role in society. AI literacy must extend beyond technical knowledge to include ethical reasoning about technological systems. Interdisciplinary collaboration among technologists, ethicists, sociologists, and affected communities must inform both design and governance. The myth of technological neutrality must give way to the recognition that all technical systems embody values and serve interests. Public discourse must ask who benefits from automated systems and who bears their risks. Most importantly, we must preserve human moral agency rather than outsourcing ethical decisions to machines.
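Many audits and continuous-monitoring setups start with a simple screening check. One well-known version is the "four-fifths rule" from US employment-selection guidelines: a group whose selection rate falls below 80% of the highest group's rate is flagged for possible adverse impact. The sketch below applies that heuristic to hypothetical counts. The function names are invented for illustration. A flag is a prompt for closer investigation, not proof of discrimination or a substitute for a full audit.

```python
def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """outcomes maps group -> (number selected, number of applicants).

    Returns each group's selection rate divided by the highest group's rate.
    """
    rates = {g: selected / total for g, (selected, total) in outcomes.items()}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any selections; ratios are undefined")
    return {g: rate / best for g, rate in rates.items()}


def flagged_groups(outcomes: dict[str, tuple[int, int]],
                   threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the (four-fifths) threshold."""
    return sorted(g for g, r in impact_ratios(outcomes).items() if r < threshold)


if __name__ == "__main__":
    # Hypothetical monthly counts from an automated screening tool.
    month = {"group_1": (60, 100), "group_2": (42, 100)}
    print(impact_ratios(month))
    print("flagged:", flagged_groups(month))
```

Here group_2's selection rate (42%) is about 70% of group_1's (60%), so it is flagged. Rerunning the check on each new batch of decisions is one minimal form of the continuous monitoring described above.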
